In this article I am going to discuss an alternative approach to using GPIO on a Raspberry Pi in Python to that taken by an early version of the RPi.GPIO package[1]. The approach occurred to me in 2012 while making a start at hardware interfacing and programming using a Raspberry Pi with some LEDs, switches and the like that had been collecting dust for 20 to 30 years. To read and write data to the GPIO lines I thought I would start with Python and the RPi.GPIO package.

After playing with RPi.GPIO it became clear that it had a few shortcomings, most obvious of which was that there was no support for waiting on a change in GPIO input pin value. You had to poll input pins to read their value and could not wait for input pin state change notification events. I knew the sys filesystem GPIO interface supported this as I had read the Linux kernel documentation on GPIO[2] and even the GPIO sys file system supporting source code used by the Raspberry Pi’s Linux kernel[3].

There were other ways that RPi.GPIO went about things that seemed a bit awkward to me such as the fact that each read or write needed to open, write to and close various files in addition to the read or write associated with the GPIO operation. Another was the pin identification number mode used to indicate whether pin numbers represented raw GPIO pin numbers or Raspberry Pi P1 connector pin numbers. It all worked but seemed a bit clunky and inefficient. As RPi.GPIO was at an early stage of development I would not be surprised if more recent versions have addressed many if not all of my concerns.

Putting my research into the Linux user space sys filesystem GPIO support to good use I had hacked together some proof of concept Python code that allowed waiting on input pin edge events. The additional I/O for each pin read or write I thought could be reduced by having some concept of an open pin - which implies a matching concept of a closed pin. Once I started thinking in terms like open and close the idea to look at Python’s stream I/O for possible concordance popped into my head (I should note that I am not a full time Pythonista). After some poking around I found the open and I/O documentation pages for Python 3[4][5].

This led me to become sidetracked from the other Raspberry Pi I/O interfacing I had intended to look into, and to investigate instead whether a similar model could be used for GPIO. That is: an open function returns an initialised object, associated with a GPIO pin, for the I/O operations requested. Like the Python 3 stream I/O implementation the exact type of the object returned would depend on the requested I/O mode for a pin. It also occurred to me that the abstraction could be extended to cover groups of pins.

The results of my investigation are freely available on GitHub[6] as the Python 2.7 package dibase.rpi.gpio. It is not currently a fully packaged Python module, and I have only updated the pin id support to cater for the Raspberry Pi revision 2.0 boards' GPIO pin layout and not the newer B+, A+ and compute module variants. Documentation explaining how to use the package is available on the GitHub repository’s wiki[7]. In the rest of this article I would like to go into some of the design decisions and implementation details.

The Big Picture

As I have mentioned I was basing the overall 'shape' of the package on that of the Python 3 stream I/O. In addition to an open function and the types for the objects returned by open the Python 3 stream I/O defines some abstract base classes, some of which provide default implementations for some methods, which as a long time C++ practitioner seemed perfectly reasonable to me.

With stream I/O we use a pathname string to identify the item to be opened. For GPIO, pins are identified by a value that is in a (small) subset of the positive integers. It seemed to me some concept of a pin id would be useful to validate such values and to support both Raspberry Pi P1 pin values (and maybe names) and the underlying chip pin id values.

One of my pet hates in code is magic values - numbers definitely and often strings as well - and the GPIO sys filesystem interface has many magic strings to build pathnames and for special values written to files. So some way to abstract and centralise such magic knowledge seemed appropriate.

As with most code modules there would almost certainly be a set of error conditions to report, in this case most likely in the form of exceptions.

So to recap the package would require:

- an open function - in fact I ended up with two: one for single pins and one for groups of pins
- GPIO abstract base classes
- a set of concrete classes fully implementing the ABCs to handle the GPIO operations
- pin id value, validation and mapping abstractions
- abstractions for magic knowledge such as pathnames
- exception classes

What Bases?

The set of abstract base classes and the operations they support would need to be different from the stream I/O case. While there would be a case for a root GPIO abstract base class similar to the Python 3 IOBase type that declared operations common for all GPIO modes, the set of those operations would differ. In particular it seemed a good idea to separate out the read and write operations into separate sub-abstract base classes as bidirectional I/O is not supported by the BCM2835 chip used by the Raspberry Pi - that is a GPIO pin may be used either for input or output but not both at the same time. A further distinction was whether read operations block on edge events or not.

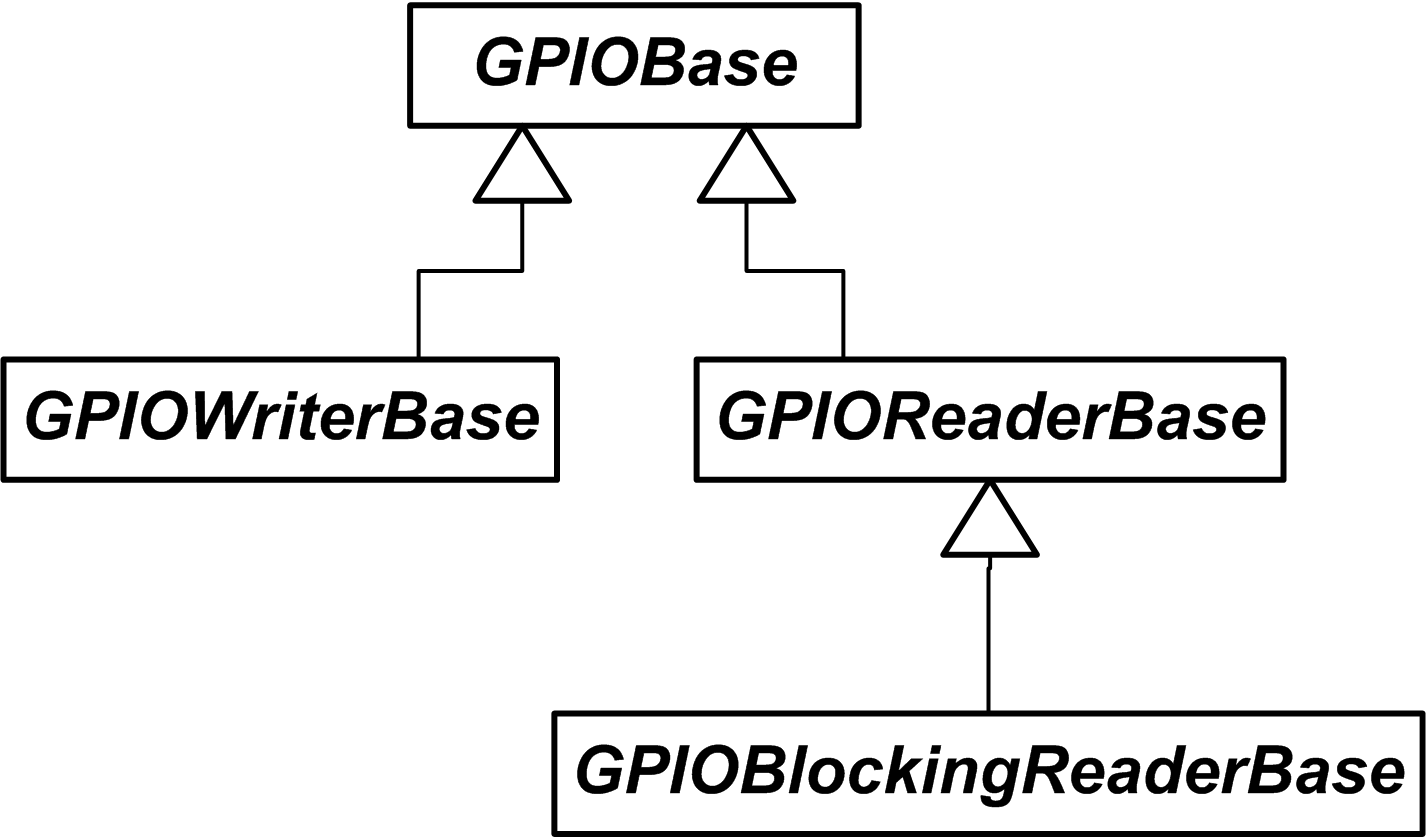

My first thought was that GPIO blocking reader types would be a sub-type of (non-blocking) GPIO reader types:

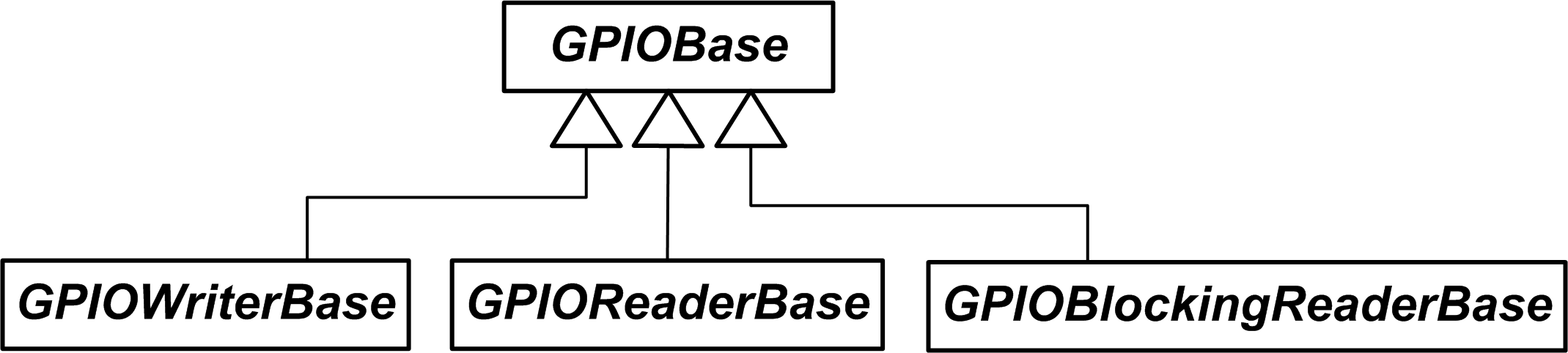

But when actually testing and implementing a blocking reader type it became apparent that the read operation would benefit from a timeout parameter which, obviously, is not required in the non-blocking polling only reader case, so the actual GPIO abstract base class hierarchy ended up like so:

For the GPIOBase class I started by cherry picking obviously useful methods from IOBase: close, closed, readable and writable.

To distinguish blocking readers from non-blocking readers I added a query method in the style of readable and writable: blocking.

As a GPIO pin is represented in the sys filesystem by a specific file path that is opened to be written to or read from, there is a file descriptor associated with each pin, so I also initially included a fileno method. Later on I realised this did not scale to the pin group case, as a group of pins is associated with a group of file descriptors. The fileno method of GPIOBase was therefore replaced by a method called file_descriptors, which returns a list of file descriptors. The fileno method was then defined for each single GPIO pin implementation class in terms of the file_descriptors method.

The readable, writable and blocking query methods are defined by the GPIOWriterBase, GPIOReaderBase and GPIOBlockingReaderBase to return True or False as appropriate.

The GPIOWriterBase, GPIOReaderBase and GPIOBlockingReaderBase base classes also add the appropriate write or read operation. write takes the value to be written and read returns the value read; the GPIOBlockingReaderBase read also takes a timeout parameter. Implementations can take advantage of the Python type system so that the values read or written can be either single or multiple GPIO pin values in various forms allowing single pin and group of pins implementations to use the same interfaces.
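The shape of these base classes can be sketched roughly as follows. This is a minimal illustration written for Python 3's abc module, not the package's actual source: the class and method names come from the text above, but the method bodies, the defaults on GPIOBase and the choice to make GPIOBlockingReaderBase a sibling of GPIOReaderBase (rather than a sub-type) are my assumptions.

```python
import abc


class GPIOBase(abc.ABC):
    """Operations common to every GPIO mode (sketch only)."""

    @abc.abstractmethod
    def close(self):
        """Release the pin or pins."""

    @abc.abstractmethod
    def closed(self):
        """Return True once close has been called."""

    @abc.abstractmethod
    def file_descriptors(self):
        """Return a list of the underlying file descriptors."""

    # Assumed defaults; the sub-bases override them as appropriate.
    def readable(self):
        return False

    def writable(self):
        return False

    def blocking(self):
        return False


class GPIOWriterBase(GPIOBase):
    def writable(self):
        return True

    @abc.abstractmethod
    def write(self, value):
        """Write a pin value (or a group of pin values)."""


class GPIOReaderBase(GPIOBase):
    def readable(self):
        return True

    @abc.abstractmethod
    def read(self):
        """Poll and return the current value."""


class GPIOBlockingReaderBase(GPIOBase):
    def readable(self):
        return True

    def blocking(self):
        return True

    @abc.abstractmethod
    def read(self, timeout=None):
        """Wait for an edge event, giving up after timeout."""
```

Because the two read methods have different signatures, keeping the blocking reader out of the GPIOReaderBase sub-tree avoids an override that changes the parameter list.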

What did you want to open?

With stream I/O what is to be opened is specified by a pathname string. As mentioned GPIO pins are identified by a small positive integer specific to the chip(s) in question. These numbers, formatted as strings, are used in the GPIO sys file interface. The raw values for the Broadcom BCM2835 chip used in the Raspberry Pi have values in the range [0,53]. However only a subset of these are wired up for user use on the Raspberry Pi board via the P1 header connector, and the unpopulated P5 header added to revision 2.0 boards. Most of these GPIO pins have alternative functions other than simple GPIO as detailed in the Broadcom BCM2835 ARM peripherals document[8].

I wanted to be able to refer to pins in various ways: raw chip GPIO pin number, Raspberry Pi P1 connector pin number and, preferably, by a name indicating the function of the pin, as used by the Raspberry Pi circuit diagram[9] and elsewhere. Additionally it would be nice to prevent inadvertently specifying a raw GPIO pin number which was not available via the P1 connector, but not prevent specifying such pins altogether. At the time I was thinking all this there was only one revision of the Raspberry Pi circuit boards so there was no P5 and no complications as to which chip GPIO pins were brought out to which P1 pins. Sometime after the initial implementation I got around to extending the pinid module to support Raspberry Pi revision 2 board GPIO[10] - including P5 - with mapping and validation performed with respect to the revision of the Raspberry Pi board in use.

The design I went with has a primary class called PinId which is supported by various validation and mapping classes. PinId extends int and provides class methods to create pin ids from integer values that represent either raw GPIO pin numbers (gpio, any_chip_gpio) or Raspberry Pi P1 and P5 connector pin numbers (p1_pin, p5_pin). In all cases a PinId object is returned if the passed value is valid, otherwise an exception is raised. The value of this object is an int in the range [0,53] representing a BCM2835 GPIO pin value. The validations and possible mappings performed vary for each of these factory methods. gpio and any_chip_gpio do no mapping but check the value is in the valid set of pins: [0,53] for any_chip_gpio, and only the GPIO pin numbers of those pins that are connected to the P1 or P5 connector for gpio. p1_pin and p5_pin first map the passed pin value from a P1 or P5 pin number to the BCM2835 GPIO pin it connects to, if any, and if the mapping succeeds return the value from gpio for the mapped pin number.

In addition to the four factory methods PinId also provides class methods named for each Raspberry Pi P1 and P5 connector GPIO pin, each having the form pN_xxx, where N is 1 or 5 and xxx is the function name of the pin in lowercase - p1_gpio_gclk for example. Each of these methods takes no parameters (other than the class) and calls the p1_pin or p5_pin factory method with the P1 or P5 pin number having that function. Note that while the functions for P1 pin names should never currently fail, as in both current board revisions the same set of pins connect to GPIO pins, the functions for P5 pins will fail and raise an exception if called while running on a revision 1 board that has no P5 connector.
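To make the factory method idea concrete, here is a cut-down sketch of an int sub-class along these lines. It is not the package's code: the PinIdError name is hypothetical, P5 support and board revision switching are left out, and the P1 map is my understanding of the revision 2.0 layout, so treat the table as an assumption to check against the circuit diagram.

```python
class PinIdError(ValueError):
    """Hypothetical error type; the real package defines its own."""


# Assumed P1 connector pin -> BCM2835 GPIO number map (revision 2.0 board).
_P1_TO_GPIO = {3: 2, 5: 3, 7: 4, 8: 14, 10: 15, 11: 17, 12: 18, 13: 27,
               15: 22, 16: 23, 18: 24, 19: 10, 21: 9, 22: 25, 23: 11,
               24: 8, 26: 7}
# GPIO numbers reachable from a connector (P5 omitted in this sketch).
_CONNECTOR_GPIOS = frozenset(_P1_TO_GPIO.values())


class PinId(int):
    @classmethod
    def any_chip_gpio(cls, value):
        """Accept any BCM2835 GPIO number in [0,53]."""
        if value not in range(0, 54):
            raise PinIdError("not a BCM2835 GPIO number: %r" % (value,))
        return cls(value)

    @classmethod
    def gpio(cls, value):
        """Accept only GPIO numbers wired to a connector pin."""
        if value not in _CONNECTOR_GPIOS:
            raise PinIdError("GPIO not on a connector: %r" % (value,))
        return cls(value)

    @classmethod
    def p1_pin(cls, value):
        """Map a P1 connector pin number to its GPIO number."""
        if value not in _P1_TO_GPIO:
            raise PinIdError("P1 pin has no GPIO: %r" % (value,))
        return cls.gpio(_P1_TO_GPIO[value])

    @classmethod
    def p1_gpio_gclk(cls):
        """Named factory: assumes GPIO_GCLK is P1 pin 7."""
        return cls.p1_pin(7)
```

Since PinId is an int, the result can be formatted straight into a sys filesystem path or compared with ordinary integers.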

Originally the pinid module was totally self contained with all the data for mapping and validation of integer pin values defined within the module. Annoyingly having to be hardware-revision aware blows that out of the water. Now the major revision as provided by the dibase.rpi.hwinfo module is used to switch the sets of P1 and P5 connector-pin-to-GPIO-pin maps to those relevant for the board revision in use. In order to do this the /proc/cpuinfo pseudo file has to be interrogated - although once read the data is cached.

Do what, how?

The Python stream I/O open function - similar to the C library fopen function - takes a second parameter specifying the so called mode in which to open the item specified by the file argument. The GPIO open functions, open_pin and open_pingroup, follow this model. There seemed no immediate need for further parameters such as the Python open function’s buffering parameter.

Like the stream I/O open function, the mode parameters of open_pin and open_pingroup are expected to be short strings. From open I kept the 'r' and 'w' characters for read (input) and write (output) modes - although only one can be specified - and added an optional second character that indicates the blocking mode for pin reads - the choices are 'N': none, 'R' : block only until a rising edge transition from low to high, 'F' : block only until a falling edge transition from high to low and 'B' : (for 'both') block until either change transition. The only valid blocking mode for pins opened for writing is none. If the second blocking mode character is not given then it defaults to none. If no characters are given (i.e. an empty string is passed) then the default of non-blocking read mode is used (i.e. it is a synonym for 'rN'); this is the default if no mode argument is given.

When I came to implement pin groups it occurred to me that such groups can be given either as a set of bits in an integer or as a set of Boolean values in a sequence and this added two additional mode characters 'I' : multiplex pin group values into the lower bits of an integer and 'S' : pin values are discrete Boolean values in a sequence. These are notionally the third character in the mode string but can be the second, in which case the blocking mode defaults to none. Note that the format of the data representing a pin group is set when opening a group of pins as each variant is handled by a separate class.
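The mode string rules described above can be sketched as a small parser. The function name parse_mode, the group flag, the use of ValueError and the default data format of 'I' for groups are all my assumptions for illustration; the package itself handles this through its mode classes.

```python
def parse_mode(mode="", group=False):
    """Return (direction, blocking, data_format) for a mode string.

    data_format is None for single pins. Sketch only: the 'I' default
    for pin groups is an assumption, not something the article states.
    """
    direction, blocking = "r", "N"
    data_format = "I" if group else None
    if mode:
        if mode[0] not in ("r", "w"):
            raise ValueError("mode must start with 'r' or 'w': %r" % mode)
        direction, rest = mode[0], mode[1:]
        # Optional blocking character: None, Rising, Falling or Both.
        if rest and rest[0] in ("N", "R", "F", "B"):
            blocking, rest = rest[0], rest[1:]
        # Optional data format character, pin groups only.
        if group and rest and rest[0] in ("I", "S"):
            data_format, rest = rest[0], rest[1:]
        if rest:
            raise ValueError("unexpected characters in mode: %r" % mode)
    if direction == "w" and blocking != "N":
        raise ValueError("pins opened for writing cannot block")
    return direction, blocking, data_format
```

For example, parse_mode('rB') gives ('r', 'B', None) and parse_mode('rS', group=True) gives ('r', 'N', 'S'), the format character standing in the second position with blocking defaulting to none.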

Open unto me…

The open_pin and open_pingroup functions parse the mode string to determine the specific type of object to create and return. The returned object will conform to one of the three abstract base class types GPIOWriterBase, GPIOReaderBase or GPIOBlockingReaderBase; however, the types returned from read and passed to write will differ. open_pin, for single pins, always traffics in Boolean values and, when writing, values that can be converted to Boolean via the usual Python conversion rules - with the exception that a string '0' (a zero character) converts to False - i.e. a low pin state. open_pingroup on the other hand traffics either in integer values or in sequences of Boolean values. When writing a sequence, each element can be any value convertible to Boolean, with '0' values again converting to False.
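The write-side conversion rule is small enough to show in full. The helper names below are hypothetical; the point is only that '0' is special-cased because an ordinary bool('0') is True in Python.

```python
def to_pin_state(value):
    """Convert a written value to a pin state: True is high, False is low.

    Follows the usual Python truth rules except that the string '0'
    is treated as False.
    """
    return False if value == "0" else bool(value)


def to_pin_states(values):
    """Apply the same rule to each element of a pin group sequence."""
    return [to_pin_state(v) for v in values]
```

This lets text read from a value file, or typed by a user, be passed straight back to a write call without '0' unexpectedly driving a pin high.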

As implementation proceeded it became obvious that the various modes - direction, blocking and (for pin groups only) data format - had various related aspects. For example direction mode was specified, and needed checking, as a character in open_pin and open_pingroup mode parameter strings; likewise, a given direction mode dictates the string written to a GPIO pin’s sys file system direction controlling file. It seemed natural then to group all of these concerns into a class. Hence there are supporting types DirectionMode, BlockMode, and (for pin groups only) FormatMode that handle the various aspects of each of the modes.

How common!

As mentioned in the pre-amble one of the things I wanted to achieve was to reduce the per-IO call overhead by moving certain repeated operations to a once performed open and, thereby, the inverse operations to a once performed close. In fact the open logic ends up in the __init__ methods of the GPIOWriterBase, GPIOReaderBase or GPIOBlockingReaderBase implementation classes. In order to be good citizens a pin or pin group should close itself when destroyed and support the Python with statement that controls context management - similar to using in C# or RAII in C++. All this adds quite a lot of boiler plate to a class' implementation.

Luckily it turned out that much of this code was common to either the pin or pin group implementations of GPIOWriterBase, GPIOReaderBase and GPIOBlockingReaderBase and so could be pushed up into a common base class: _PinIOBase for pin implementations and _PinGroupIOBase for pin group implementations. This reduced the specific implementation classes to usually having to implement only an (often minimal) __init__ method and the write or read I/O method. The exception was the PinBlockingReader class, which annoyingly needed to add extra validation in the middle of the otherwise common base __init__ processing flow. This was achieved using the template method pattern: the base __init__ calls out to an overridable method called cb_validate_init_parameters, which PinBlockingReader overrides to inject its additional logic while the other two pin implementation GPIO classes rely on the default base behaviour.
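A stripped-down sketch of that arrangement follows. Only the hook name cb_validate_init_parameters is taken from the package; the class names, the parameters and the bookkeeping are simplified stand-ins, and the real export and file handling is reduced to a flag so the control flow is easy to see.

```python
class PinIOBaseSketch(object):
    """Common open/close boilerplate for single pin classes (sketch)."""

    def __init__(self, pin_id, blocking_mode="N"):
        self._open = False  # set first so __del__ is safe if we raise
        # Hook: sub-classes may add validation in the middle of __init__.
        self.cb_validate_init_parameters(pin_id, blocking_mode)
        self._pin_id = pin_id
        self._blocking_mode = blocking_mode
        self._open = True   # real code would export the pin and open files

    def cb_validate_init_parameters(self, pin_id, blocking_mode):
        """Default hook: no extra validation."""

    def closed(self):
        return not self._open

    def close(self):
        if self._open:
            self._open = False  # real code: close value file, unexport pin

    # Context management so the pin is closed on leaving a with block.
    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self.close()
        return False  # do not swallow exceptions

    def __del__(self):
        self.close()


class BlockingReaderSketch(PinIOBaseSketch):
    def cb_validate_init_parameters(self, pin_id, blocking_mode):
        # Assumed rule: a blocking reader needs an edge to wait on.
        if blocking_mode not in ("R", "F", "B"):
            raise ValueError("blocking reader requires an edge mode")
```

Used as `with BlockingReaderSketch(4, "R") as pin: ...`, the pin is closed on leaving the block whether or not an exception was raised.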

Some nitty gritty details…

So what exactly did I change in the flow of calls to the sys file system, from that performed by the early RPi.GPIO package?

First I’ll review how to get access to a GPIO pin via the sys file system interface.

A pin has first to be exported by writing its chip GPIO number ([0,53] in this case) to an export file. On doing this the driver creates and populates a pseudo directory for that pin, if it is available - meaning not already exported. When done with a pin it is unexported by writing the GPIO number to an unexport file, which removes the directory created by writing to export.

Once exported, the desired direction and, in my package’s case, edge modes are set by writing specific values to specific (pseudo) files in the exported pin’s directory. The value file in that directory is then opened as appropriate and values can be written to or read from it. Finally the file is closed and the pin unexported.

In the early RPi.GPIO package I played with, each read or write (input or output) from/to a pin went through the whole process: unexporting the pin if exported, (re-)exporting it, setting up the direction (another file open, write and close), writing or reading the value and unexporting the pin.

In my model I export the pin, set the direction and edge modes and open the value file for the pin during object creation via open_pin. The read/write operations do not need to repeat those steps and just get on with reading or writing the open value file. Then on close the value file is closed and the pin unexported.
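The difference in call flow can be sketched as below. This is an illustration of the open-once model rather than the package's implementation: OpenPinSketch and its parameters are hypothetical, the root argument exists only so the sketch can be pointed at a scratch directory, and the already-exported check is deliberately left out. The file names (export, unexport, gpioN/direction, gpioN/edge, gpioN/value) are those of the kernel's sys filesystem GPIO interface. I have used os-level reads and writes here so that no Python-level buffering gets between the code and the value file.

```python
import os

GPIO_ROOT = "/sys/class/gpio"  # standard sys filesystem GPIO directory


def _write_text(path, text):
    with open(path, "w") as f:
        f.write(text)


class OpenPinSketch(object):
    """Set-up happens once here, not on every read or write."""

    def __init__(self, pin, direction="in", edge="none", root=GPIO_ROOT):
        self._pin, self._root, self._fd = pin, root, None
        pin_dir = os.path.join(root, "gpio%d" % pin)
        # One-off set-up: export, then set direction and edge modes.
        _write_text(os.path.join(root, "export"), str(pin))
        _write_text(os.path.join(pin_dir, "direction"), direction)
        if direction == "in":
            _write_text(os.path.join(pin_dir, "edge"), edge)
        # The value file stays open until close is called.
        flags = os.O_RDONLY if direction == "in" else os.O_WRONLY
        self._fd = os.open(os.path.join(pin_dir, "value"), flags)

    def read(self):
        os.lseek(self._fd, 0, os.SEEK_SET)
        return os.read(self._fd, 1) == b"1"

    def write(self, high):
        os.lseek(self._fd, 0, os.SEEK_SET)
        os.write(self._fd, b"1" if high else b"0")

    def close(self):
        if self._fd is not None:
            os.close(self._fd)
            self._fd = None
            # One-off tear-down: give the pin back.
            _write_text(os.path.join(self._root, "unexport"), str(self._pin))
```

Each read or write is now a seek plus a one byte transfer on an already open descriptor, instead of several file opens and closes.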

I chose a policy that it was an error if a pin were already exported when attempting to open it, because it could be exported and used by a separate process in a different way (for output instead of input say). Hence the code checks to see if the pin’s directory exists before trying to export the pin and raises a PinInUseError if it does. Note that this is not 100% foolproof as a pin could have its exported state change between the check and actually trying to export the pin. However in most use cases one would expect GPIO pin use to be fairly static and so such a situation should only occur by accident when initially setting up pin / process assignments or during revamps of such assignments.

This policy does have one ramification though: a pin must be unexported when it is finished with otherwise the next execution of the program using the pin will fail as it will still be exported and therefore deemed to be in use. Hence the effort to try to ensure a pin is closed when done.

Nevertheless a pin may still be left exported (especially during development and testing!) so I added a function called force_free_pin which will unexport a specified pin if it is exported and return a Boolean indicating whether it did so. In practice I found that using pin and pin group I/O objects controlled by a with statement was an effective way of preventing pretty much all such mishaps (I suppose it is possible that the process terminates in a way that is outside the control of the Python runtime, which would presumably defeat any clean-up).
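The in-use policy and the escape hatch might look something like this. PinInUseError and force_free_pin are the names used by the package, but the bodies, the helper functions and the root parameter (there so the sketch can run against a scratch directory) are my own simplification.

```python
import os

GPIO_ROOT = "/sys/class/gpio"  # standard sys filesystem GPIO directory


class PinInUseError(Exception):
    """The pin is already exported and so presumed to be in use."""


def pin_is_exported(pin, root=GPIO_ROOT):
    """An exported pin has a gpioN directory under the GPIO root."""
    return os.path.isdir(os.path.join(root, "gpio%d" % pin))


def export_if_free(pin, root=GPIO_ROOT):
    """Export the pin, refusing if somebody already has it exported."""
    # Not atomic: the state could change between the check and the write.
    if pin_is_exported(pin, root):
        raise PinInUseError("GPIO pin %d is already exported" % pin)
    with open(os.path.join(root, "export"), "w") as f:
        f.write(str(pin))


def force_free_pin(pin, root=GPIO_ROOT):
    """Unexport the pin if exported; return True if an unexport was done."""
    if not pin_is_exported(pin, root):
        return False
    with open(os.path.join(root, "unexport"), "w") as f:
        f.write(str(pin))
    return True
```

A development script can call force_free_pin for each pin it uses before opening them, which clears up after a previous run that died without closing its pins.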

Now on to the matter of reading and writing high and low values from or to a pin. For non-blocking reads and writes it is a case of reading or writing '1' or '0' from/to the zeroth position of the open value file. Blocking reads involve using the select system function - which is presented as the Python select.select function - to wait on the value file or timeout expiration and then - if not a timeout - performing a read as per the non-blocking case.
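A minimal sketch of the two kinds of read, working on a raw file descriptor for an already open value file. The function names are hypothetical. The kernel GPIO documentation says that when using select the descriptor should be placed in the exceptional conditions set, which is what the third argument to select.select is; returning None on timeout is simply a convention chosen for this sketch.

```python
import os
import select


def read_pin(fd):
    """Non-blocking read: fetch the character at position zero."""
    os.lseek(fd, 0, os.SEEK_SET)
    return os.read(fd, 1) == b"1"


def wait_and_read_pin(fd, timeout=None):
    """Block until an edge event or timeout (seconds, None = forever).

    Returns the pin state after an event, or None if the wait timed out.
    """
    _, _, exceptional = select.select([], [], [fd], timeout)
    if not exceptional:
        return None  # timed out with no edge event
    return read_pin(fd)
```

Which edges wake the wait is decided beforehand by what was written to the pin's edge file, not by anything in these calls.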

For pin groups, there are six implementation classes - three trafficking in pin values multiplexed into the lower bits of an integer and three trafficking in sequences of Boolean values. They rely on the single pin types - calling open_pin for each pin in the group and passing in the same mode for each pin. Closing a pin group just iterates through the sequence of open pin objects and calls close on them. As for single pin GPIO objects, __del__ and __exit__ call close to ensure all pins in a group are closed on object destruction and on exit from a with clause.

Pin group non-blocking reads are simply a matter of reading each pin and composing the correct type of composite value. Pin group writers cache the current value and use it to only write to pins whose state has changed. The two pin group blocking reader types pass all the group’s pin objects (which support a fileno method) to select.select and so wait on a change to any of the group’s pins. They also use a cached value and only update those pins' values that were indicated as changed from the information returned from select.select.
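The integer flavour of group reads and cached writes can be sketched as follows. The names are hypothetical and the bit ordering (first pin in the group is bit 0) is an assumption made for the sketch; the pin objects only need read and write methods like those of the single pin types.

```python
def read_group_as_int(pins):
    """Pack each pin's state into the low bits of an integer.

    Assumes pins[0] maps to bit 0, pins[1] to bit 1 and so on.
    """
    value = 0
    for bit, pin in enumerate(pins):
        if pin.read():
            value |= 1 << bit
    return value


class GroupIntWriterSketch(object):
    """Writes an integer to a group, touching only pins that changed."""

    def __init__(self, pins):
        self._pins = list(pins)
        self._cached = None  # nothing written yet, so write every pin

    def write(self, value):
        for bit, pin in enumerate(self._pins):
            mask = 1 << bit
            changed = self._cached is None or (value ^ self._cached) & mask
            if changed:
                pin.write(bool(value & mask))
        self._cached = value
```

The exclusive-or of the new and cached values has a bit set exactly where a pin's state differs, so unchanged pins cost no file I/O at all.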

Odds and sods

As with any set of code, as you go along error conditions pop up and to cater to those in the GPIO package I created a module - gpioerror - for GPIO specific errors. There is a top level exception called GPIOError which sub-classes the Python Exception type from which all the other GPIO specific exceptions derive - although some do not do so directly but sub-class other GPIO exceptions. Currently each exception class simply defines a static error string as its doc comment and passes that doc comment to its super class in its __init__ method.
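The doc comment trick can be sketched like this. GPIOError and PinInUseError are names from the package, but the wording of the strings is invented here, and for brevity the sketch lets sub-classes inherit a single __init__ rather than each defining their own as the package does.

```python
class GPIOError(Exception):
    """Base class for GPIO specific errors."""

    def __init__(self):
        # self.__doc__ is the doc comment of the instance's own class,
        # so each sub-class reports its own message.
        super(GPIOError, self).__init__(self.__doc__)


class PinInUseError(GPIOError):
    """The pin is already exported and so presumed to be in use."""
```

Note that a class without its own doc comment has a __doc__ of None (it is not inherited), so with this scheme every exception class needs one.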

I have mentioned that the sys file system for GPIO relies on specific pathnames and values to be written to files. Special values written to files are generally specified by the pin module types that group the related aspects of direction and blocking modes. The sys file system pathname magic strings and knowledge of how to create specific pathnames form a set of functions in the sysfspaths module - some of these are nullary functions (take no arguments) that return static path fragments - the gpio_path function returns the base absolute path to GPIO support in the sys file system for example. Other functions take a pin id value and use this to create and return pin specific paths - the direction_path function returns the path to the direction (pseudo) file for a given pin id number for example.
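A sketch of what such path functions might look like. gpio_path and direction_path are named in the text; the other function names are my guesses at a consistent set, while the path strings themselves are those of the kernel's sys filesystem GPIO interface.

```python
def gpio_path():
    """Base absolute path of GPIO support in the sys filesystem."""
    return "/sys/class/gpio"


def export_path():
    return gpio_path() + "/export"


def unexport_path():
    return gpio_path() + "/unexport"


def pin_path(pin_id):
    """Directory that appears once pin_id has been exported."""
    return "%s/gpio%d" % (gpio_path(), pin_id)


def direction_path(pin_id):
    return pin_path(pin_id) + "/direction"


def edge_path(pin_id):
    return pin_path(pin_id) + "/edge"


def value_path(pin_id):
    return pin_path(pin_id) + "/value"
```

With these in place no other module needs to contain a literal path fragment, which is the whole point of centralising the magic strings.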

Testing, testing…

In case you were wondering, yes there are tests and yes they were developed in step with the code they tested. There are two sets of tests. Unit tests, originally at least, do not rely on any modules other than that under test. What I originally called system tests, and subsequently renamed platform tests to remove ambiguity, are tests that have to be executed on a specific platform - a Raspberry Pi running Raspbian or compatible Linux system in this case. Some of the so-called system tests required user interaction - either to create input (e.g. toggle switches) or observe output (e.g. check on the lit state of an LED). These tests have subsequently been split out into tests termed interactive tests. Note that platform and interactive tests are probably sub-types of integration tests, although some of them may tend towards being system tests.

References

- gpio.txt from Linux kernel, Raspberry Pi GitHub (note: for the rpi-3.2.27 branch, use branch selection dropdown to select another)
- Generic support provided by gpiolib (as far as I could tell), Raspberry Pi Foundation GitHub
- dibase-rpi-python library documentation, Ralph McArdell GitHub
- Upcoming Raspberry Pi Model B Revision 2.0 board revision post, Raspberry Pi Foundation